Nginx proxy with AWS ELB: Critical handling for intermittent gateway timeout ( 499 or 502 )

This post describes important handling that needs to be put in the Nginx to avoid getting intermittent gateway timeouts during a proxy pass to an AWS ELB. Please read on to see the issue and the handling required for avoiding it.

The Issue

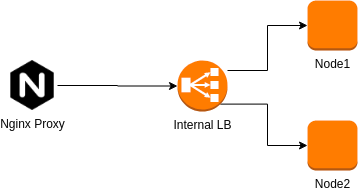

We are using Nginx as a reverse proxy and proxies the requests based on the configuration to the application using an AWS ELB.

# Configuration for the proxy passing to the internal lb

location /api {

proxy_pass http://internal-lb.ap-south-1.elb.amazonaws.com:8080;

proxy_redirect http://internal-lb.ap-south-1.elb.amazonaws.com:8080 http://www.example.com;

}

We were facing issues of gateway timeout intermittently ( maybe over a week or 2 weeks time ) . The service was accessible using individual instance IP as well as the internal load balancer URL. The issue of timeout goes off when we restart the Nginx.

During our investigation, we found that in the Nginx error logs, there was a reference to the resolved IP of the ELB giving connection timeout. When checked in the AWS console under “Network Interfaces”, there were no such IP assigned to the internal LB.

The root cause

With some googling and checking the documentation for the ELB, we came to understand that the IP for the AWS LB can get changed at times ( no specific timeframe ). But the Nginx behaviour is to resolve the DNS once during the time of the startup and use the resolved IPs. This caused the timeouts as the IPs got changed at the LB and Nginx was still using the old resolved IPs when trying to contact the service. Once we restart Nginx, it was resolving the IPs again and proxied to the new IP.

The Solution

There are several posts in the AWS forum regarding this dynamic IP behaviour and none of them has addressed this issue to a proper resolution. Most of the posts were advised to use Route 53 ( the default DNS provider for AWS) which has got handling for this case due to direct integration with the LBs. But this was not an option for us as there were different rules configured in the Nginx.

Finally, we came across some stackoverflow.com answers and reference posts which directed us towards the “resolver” directive of the Nginx. This allows us to provide a DNS resolver address and a custom TTL value for this. It ensures that the DNS will be resolved after specified TTL is over without requiring any restart or reload.

MICROIDEATION APP: Programming and tech topics explained as quick learning cards ( ideations ) .

We have launched our new mobile app for learning programming and other tech-based topics in under 30 seconds. The content is crafted by subject-matter-experts on the field and each topic is explained in 500 or fewer characters with examples and sample code. You can swipe for the next content or freeze and follow a particular topic. Each content is self-contained and complete with links to related ideations. You can get it free ( no registration required ) in the play store now.

Working configuration

Following is the working configuration that we need to use for the fix. It uses the configuration above with the required changes.

# Configuration for the proxy passing to the internal lb

location /api {

# Define the resolver address and a ttl

resolver 10.30.0.2 valid=30s;

# Define a variable for the load balancer. This is important as nginx won't consider the

# lb address to resolve without a variable

set $internallb internal-lb.ap-south-1.elb.amazonaws.com:8080;

# Configure proxy_pass with variable in the url to enforce DNS resolution

proxy_pass http://$internallb;

# Configure proxy_redirect with variable in the url to enforce DNS resolution

proxy_redirect http://$internallb http://www.example.com;

}

In the above configuration, we are using the “resolver” directive of Nginx. Specifying this directive will make sure that the hostname entries used in the respective block will use the specified DNS resolver for identifying the correct IP address.

Please refer the section below to see how to identify the resolver IP address.

We can also specify this on the server block or the http block of the nginx configuration to make it applicable for all the configuration. Putting it under the location makes it applicable to the current block only.

Important Caveat

It is very important to note that, just providing the resolver in the block is not enough. Nginx may not resolve the DNS for the hostnames still. We need to make sure that the hostname is put as a variable and then used in the URL. This enforces the DNS is resolved based on the resolver configuration used.

Getting the IP address for resolver directive

One of the biggest questions we had, when we saw the solutions online, was on how to identify the IP that we need to use for the resolver directive. We finally identified that this need to be DNS server IP of AWS for the current VPC.

By default, the second IP of the VPC in the CIDR range is reserved for the nameserver of that VPC. For eg: If your VPC CIDR is 10.30.0.0, then the IP for the nameserver would be 10.30.0.2. Alternatively, if you are using Linux based instances, you can log in to the servers/instances where the Nginx is running and get the same using below command:

$vi /etc/resolv.conf ; generated by /usr/sbin/dhclient-script search ap-south-1.compute.internal nameserver 10.30.0.2

The nameserver server IP will be on the last line as seen above

Footnotes

This seems to be a very critical item that needs to be handled when your stack includes Nginx and AWS ELB. I am not sure if Nginx is supposed to handle DNS resolution better or whether AWS ELB shouldn’t be changing the IP address for LBs. Both of them may have design restrictions or decisions supporting their behaviour. But for a modern system to have reliable connectivity and reduced downtime, it important that all these components work as expected.

References

- https://github.com/DmitryFillo/nginx-proxy-pitfalls

- https://stackoverflow.com/questions/40200938/varnish-nginx-elb-499-responses/40357588#40357588

very usefull!

Hello

My query is, I am using a public load balancer so should I still use the VPC DNS Server IP as a resolver?

Please suggest.